Transcribing my grandma's memoirs with LLMs

Background

Some time ago, my 93-year-old grandma took on a project to write down her her memoirs from her childhood. She is a very avid story-teller and somehow always manages to surprise us with new, interesting stories even from her early youth, where we might have imagined that she had already told us everything. Her capacity for remembering even the tiny details is really quite impressive, and we had often suggested that she should write some of them down. Eventually, she took on the project and apparently got quite excited about it as well: She told us that she would lose the track of time and sometimes keep writing until 2 am, and when I asked to see them after a while, she had already written down 70 pages. I then suggested that I could write them down on a computer so that they would be easier to read and share with others in the family, and she gave them to me to transcribe.

The problem setup

However, after writing down the first few pages, it became clear that it would be quite a bit of work: The handwriting was not always very clear and had some mistakes, and required me to guess a lot of words from the context. She also did not go to a lot of schools in her youth, and her written Finnish it not quite standard in a couple of different ways, making the transcription process quite tricky and subject to some interpretation even after the handwriting itself was deciphered. Overall, the challenge was that she wrote the text in a way that was very similar to how she speaks, and Finnish spoken language is quite different from the written language, especially so if spoken with an old accent. For instance, there was no punctuation, the word ordering was often non-standard and some of the words were not really used anymore (requiring me to either search on the internet or call and ask her when the internet didn’t know). The narration of the story itself turned out to be often brilliant in my opinion, but the text is not really directly readable for a native Finnish speaker without quite a bit of effort. In that sense, writing the text in an easily readable format is more of a language translation task than a simple OCR task, and ended up taking me roughly 35 hours, half an hour per page.

She took a break from writing for a while, but recently she started again, and wrote another 70 pages. The first 70 pages was about her childhood in the 1930s in Karelia, with a lot of detail on her family escaping the front lines and becoming refugees inside Finland during World War II, and ended with the end of the war. The second batch is about her life after the war, settling down in my home town, getting jobs, having children, and so on.

First attempts at automation

I managed to transcribe the first 20 pages of the second batch, at which point I got an idea: Could I just automate this process by taking pictures of the handwritten pages and giving them as input to GPT-4? It seemed sensible enough, considering that regular OCR in itself would be doomed to failed due to the issues listed before. I took a picture of a page with my phone camera, and tried it out with an accompanying prompt explaining the context and asking the model to make the best guess at what the original text was. This did not work at all, and it only outputted some sort of pseudo-Finnish, looking something like:

Ei pojkea lohkeilta noekkeinöllä aurgoon. Tattuu että sinä oloox

semossa haamersa. Tiaassi turpaa thomas ässä kehuutalaisan poikas.

Kui halvitaqa orvaski? Vai olla saavassa pussa semenssa. hänöä jäli

sano että äkun tämä taimen tultaa että oli kuollut. Tait sina

I then figured that maybe with more context information, it could make smarter inferences. I tried a few things, including giving the entire previous page (already written down by me) as context, but this did not work either, and instead it just started hallucinating a kind of clichéd love story based on the previous page. I then figured that probably the main bottleneck here is the quality of the initial information extracted from the images, given that it should clearly be possible to do a better job just based on the image itself. After googling for a while, I found Transkribus, an OCR service built for handwriting recognition, and they have a free trial on their website. The OCR transcriptions provided from there were not still not perfect, but clearly contained correct information about the original text. Now the text looked something like (perhaps interesting for Finnish readers):

jotkn työ paikaa lähtettiin marsinall. kenganä

ostoon. Samassahänsano, Kävisin tuossa ihanlähellä väkä-

kulmaharvoja vähänvärialauttoa veisitkö, olla tässä senääkaaraala

sano, että hänkö täti sano, että eikein tämä toinen jotta äiti oli

mennä...

I then tried giving this as input to GPT-4 with a prompt explaining the context, and it worked quite well: I got some readable text out of it, with the experience being somewhat similar to me actually reading the original handwritten text, without the problem of having to figure out the handwriting:

Jotkut työpaikalta lähtettiin marsinnalla kenkäostoon. Samassa hän sanoi,

"Kävisin tuossa ihan lähellä, väkäkulmaharjoja vähän väriä laittaa, veisitkö?

Olla tässä sen aikaa kaaralla," sanoi, että hänkö tätini sanoi, että eikö

tämä toinen, jotta äiti oli mennyt...

It was, however, kind of clear that this would not be good enough to be very useful in practice. Given that I had decided to go with an LLM, I figured that I could make this into a small machine learning / LLM prompt engineering project. Since I had already transcribed the first 20 pages of the new batch, I could use those as ground-truth examples for validating the results of different automated transcription methods I would try. Another thing on my mind was that perhaps I could use some of the ground-truth examples to do in-context learning with the LLM, so that it would learn to refine the text in a similar way as I do. Perhaps it could even be possible to fine-tune some small open-source LLM for the task. The next part was then figuring out a comprehensive plan.

Data Collection

Given that the main issue with the initial attempt seemed to be the quality of the initial OCR transcription, I figured that I should put some effort into getting high-quality images of the pages. The problem was that when I did this, it was still winter and most of the day was really dark. The solution was that I did have a really bright lamp meant for dealing with seasonal affective disorder (depression due to darkness in the winter). I had never really used it for the original purpose, but it turned out to be great for this task since it gave a very even light for all parts of the paper. I took 2 pictures of each page to be sure at least one of them would be good enough.

OCR stage

I tried to search for the best OCR system for this kind of task, and found a post saying that Google Cloud Vision was better than open-source alternatives. Trying to fine-tune some open-source model with my hand-written transcriptions also crossed my mind, but then decided to just go with Google Cloud Vision as a quick method. Perhaps it would be good to return back to this stage later on to maximize the amount of information we directly extract from the document.

The LLM

For the LLM, I decided to stick with GPT-4 / GPT-4-Turbo API (and GPT-3.5 for cheaper experimentation), since it was overall the best model for Finnish at least at the time when I started playing around with this. To save money and time, I mostly performed the experiments with GPT-3.5-Turbo, with the intention of using GPT-4 mainly to bump up the performance in the end.

Evaluation metrics

For the evaluation metrics, I ended up with the following:

- The edit distance / edit ratio between the generated text and my transcriptions (possible to get for the first 20 pages).

- The cosine similarity between embeddings of the generated text and my transcriptions. (embeddings provided by the OpenAI API)

The edit distance seems like the easiest to interpret, but it has the problem that the transcriptions I had done so far were the ones with altered sentence structures and changed words. It would be quite unlikely that the LLM would end up generating something that matched exactly with that. The cosine similarities try to get around this, capturing the semantic similarity of the texts, and not just whether they exactly match.

Variance of the metrics There was quite a bit of variance in the metrics for different pages and different runs, so I decided to evaluate each model type with 10 different pages, and for each page try 4 times. After a bit of experimentation, it seemed that this resulted in quite stable results, and I could then compare the different methods more reliably.

Side note on ground-truth data: In retrospect, I probably should have written down the direct transcriptions without the translation aspect while doing the original transcriptions. This would have made it possible to only measure performance of the method just for the OCR task, and there the edit distance would make more sense.

Initial prompt-tuning

The basic prompt I used was the following:

The following lines contain the outputs of an OCR system applied to the memoirs

written by an old Finnish person. The OCR system is not perfect, and the lines

contain errors. Please correct the errors, and try to make your best guess at

what the original text was. Write only the corrected text:\n------\n

In addition to this, I wanted to try out a couple of directions:

- Writing the prompt in Finnish, in case switching between languages is confusing the model

- Adding some of the names of the people and places mentioned in the text as context (I noticed that this actually )

- Adding an example of some ground-truth transcriptions to the prompt as an in-context learning example

- Adding an example of the original OCR output - ground-truth pair as an in-context learning example

- Suggesting the model to try really hard (“You are a master at transcribing Finnish text in this manner. This is extremely important to me and my family…”)

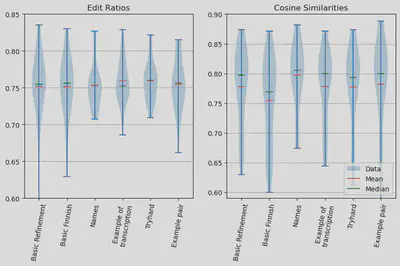

The results are in the following plot:

It seemed that adding the names and places as context was the only one that actually had a clear improvement on the results (the cosine similarity, the mean edit ratio doesn’t seem affected). Interestingly, the prompt in Finnish degraded the results a bit. Perhaps the model is simply better at following instructions in English, even on Finnish-language related tasks. The example-of-transcription, try-hard and in-context example prompts did shift the numbers a bit, but very small amounts. I decided to stick with the names and places as context for all future experiments. I also tried to add the previous ground-truth page as context at this stage, but found that it didn’t really seem to help at all.

Adding more signal in the context

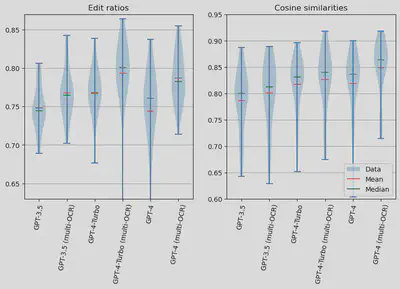

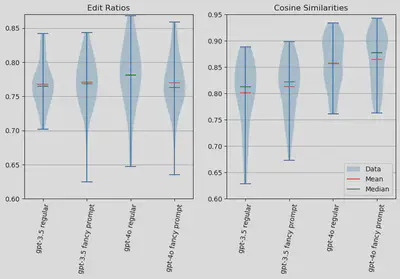

I then spent some time browsing through the transcriptions so far, and noticed that the quality of the initial OCR stage was still clearly bottlenecking the performance. Quite often, the individual words were simply still incorrect, because the initial Google Cloud Vision OCR output happened to be really unclear. I then got the idea that maybe we could consider the OCR output as a noisy measurement of the true underlying text, and the LLM postprocessing as solving a Bayesian inference task, trying to denoise the data. From this point of view, having multiple noisy OCR measurements should also make the posterior more peaked around the true value. So I then tried adding two OCR transcriptions to the LLM input (initially obtained from two separate pictures I took for each page), and edited the initial prompt accordingly. At this stage, I also tried GPT-4 and GPT-4-Turbo to see how much better would they be. It turns out that this strategy worked really well 1:

GPT-4 and GPT-4-Turbo were clearly better, but more importantly adding more OCR versions clearly does improve performance! 2 I also tried adding more than 2 OCR outputs in the input, but the performance didn’t increase after that, and started clearly decreasing at 4 versions of the OCR transcriptions. There is some discrepancy in the edit ratios vs. embedding similarities, and we will analyse this a bit further in the next section.

At this point, I also noticed that GPT-4 is kind of expensive and I hit my 10 dollar OpenAI compute credit budget quite fast :). The problem is that evaluating these models robustly requires a lot of generations to get any statistical significance, and running a lot of evals with 40 calls to GPT-4 does accumulate the price. Luckily, however, the GPT-4o model was published in the meantime, and it turns out to work about as well as GPT-4, and is a bit cheaper. I put in another 10 dollars for the final experiments.

Meta-prompting

I had tried a lot of other stuff as well (some listed at the end of the post), but they didn’t seem to work very well. I then turned to something that doesn’t require any effort from me and apparently should improve results a bit: Asking Chat-GPT to give me a better prompt based on prompt I was using. It came up with this:

You will be provided with multiple versions of OCR transcriptions from handwritten memoirs written by a Finnish grandmother. The text is in Finnish and contains several characters and place names that might appear frequently. The OCR output is often messy and includes errors. The original handwritten text may also have grammar mistakes. Your task is to refine the text by correcting the OCR errors and any grammar mistakes while making your best guess at what the original text intended to convey.

Here are some key characters and place names that may appear in the text:

- **Characters**:

- Tyyne:...

- **Place Names**:

- Sukeva:...

Please follow these instructions carefully:

1. Review all versions of the OCR text provided, and combine the information from them to get a single corrected text.

2. Correct any OCR errors and grammar mistakes.

3. Use context to make educated guesses for unclear sections.

4. Write only a single corrected text in Finnish, without any additional explanations or any other text.

Here are the OCR transcriptions:

Definitely more structured than my original pure string of text without any newlines! Let’s see if it affects the results:

It seems seems that the structured prompt does slightly increase the embedding similarities, but annoyingly the edit ratio goes down a little bit. To investigate, I tracked down a case where the edit ratio was better with the old prompt and the embedding similarity was better with the new prompt. The check confirmed the following differences:

- The old prompt did follow the OCR transcription more, whereas the new prompt went a bit further in creating good-quality Finnish text and changing the sentence structures when appropriate.

- The new prompt contained overall more of the correct information about the story than the old prompt (e.g., ‘mother’ changing to ‘father’ with the old prompt)

- The new prompt output was also structured into paragraphs, whereas the old prompt output was just a block of text. This may affect the results slightly, since my ground-truth did have some newlines in it.

So the prompt structure definitely does make a difference, although choosing which is better gets a bit subtle. I decided to go with the new prompt, although probably I should think about the evaluation metrics or go through the outputs manually a bit more to be totally sure that it is better.

Outcome

Anyways, the LLM input/output at this point looked something like:

Original OCR output:

Ei muuta kun kuokkaa Lapiokäteen jo ajo alko syntya

dimme jo kaivaaneet puolet oli pehmästä maata äitini läht

pois, iltä kyllähän homma hartuu aiti sano, että pitäähän

Eyja tekifaillé, olla kahvitunti lähtikahvin keittoon

My ‘ground-truth’ transcription:

Ei muuta kun kuokka ja lapio käteen, ja alkoi oja syntyä. Oli

pehmeätä maata. Olimme jo kaivaneet puolet, kun äitini lähti pois.

Äiti sanoi, että “kyllähän homma hoituu, ja pitäähän työntekijällä

olla kahvitunti”.

GPT-4 output:

Ei muuta kuin kuokka ja lapio käteen, joilla työ alkoi sujua.

Olimme kaivaneet puolet, oli pehmeää maata. Äitini lähti pois,

sanoen, että kyllähän homma sujuu. Äiti sanoi, että pitäähän

työntekijöillä olla kahvitauko, lähtien keittämään kahvia

It’s a bit tricky to convey the difference without assuming knowledge in Finnish, but the generated text is clearly more readable and closer to the original text. The content is also almost the same, although one part was read slightly incorrectly (although in a plausible way). GPT-4 also wrote the last sentence potentially slightly better than I did, although my transcription was closer to the original text in this case. There is also a legitimate uncertainty of whether one part should be the GPT-4 interpretation or my interpretation. To give a rough idea, I tried running each through Google Translate to English, with the following outputs:

Original OCR output through Google Translate:

All you have to do is hoe and the drive to Lapiokäte is already born

the half we had already dug was from the soft ground my mother left

away, things will get busy in the evening, you say that you have to

Eyja tekifaillé, to be coffee hour went to the coffee soup

My ground-truth transcription through Google Translate:

All you have to do is pick up a hoe and a shovel, and a ditch began

to appear. There was soft ground. We had already dug half when my

mother left. The mother said that "yes, the job will be done, and

the employee must have a coffee hour".

GPT-4 output through Google Translate:

Nothing but a hoe and a shovel in hand, with which the work began

to flow. We had dug half of it, it was soft ground. My mother left,

saying that it would be fine. Mother said that the workers must

have a coffee break, to make coffee

In the last two cases, the output word order looks a bit funny in English, but this is just because Google Translate doesn’t do a very good job of translating from the quite liberal word order sometimes used in Finnish. This example was towards the more succesful end, and sometimes it does still misinterpret some parts. Overall, it does seem to work quite well, although not totally reliable.

Conclusion and further ideas

The results are still not quite perfect, but the quality is good enough to be useful. To summarise the positive results:

- I adding hand-crafted context through including the names of characters and locations clearly helps the performance

- Adding multiple versions of the OCR transcriptions for each page allows the LLM to guess better the true underlying text, and clearly improves performance

- Using a nicely structured prompt probably helps a bit, and asking an LLM to get such a prompt is pretty easy

- Unsurprisingly: The latest models (GPT4/GPT4-Turbo/GTP4o) show clearly better performance than GPT-3.5.

Overall, I think I will now try running the model as is through the entire text and maybe do some manual corrections from there so that I don’t end up postponing writing the actual text forever. However, there would be a lot of cool things to try out still, for instance:

- Adding the previous page as context to the LLM input, to see if it can utilize the context information better. I actually tried this, but it didn’t seem to help in the initial OCR output -> refinement task. My guess is that somehow the model has to focus on just guessing the individual words and sentences due to the messiness of the OCR output, and doesn’t really get to use the fancy context information that effectively. However, if I added another layer of refinement where the LLM output (now easily readable for the LLM) is corrected based on the context of the previous page.

- Another try at in-context learning by adding an example of an original OCR output - ground-truth pair as context to the LLM. Yes, I tried a version of this and didn’t get a clear improvement, but maybe I should have curated the examples more carefully, instead of just adding the entire page of OCR-output followed with the entire page of the ground-truth transcription. Probably should cut the examples into smaller pieces and have them one after the other.

- Human in the loop. It is likely that for the later pages, the manual context I added in the form of names is not as useful, since new characters and locations are introduced. Would be interesting to figure out a methodology for semi-automatically getting the optimal set of context-information, e.g, having the LLM suggest potential names and places based on the text.

- Could be an interesting project to try to fine-tune a small open-source LLM for the task. Although I’m not sure if there exist very good open-source LLMs that are good for Finnish yet, and also I don’t have a very large amount of data.

- Chain-of-thought reasoning would be really interesting to see, although that would take quite bit more compute and care when setting up. E.g., the model could be instructed to go through different possibilities for figuring out the OCR and make decisions based on the previous context.

- Evaluating the evaluation metric. A problem noticed towards the end was that the edit distance and cosine similarities didn’t always agree. In fact, earlier on I tried even using the small embedding model by OpenAI, and that didn’t always agree with the large model that I did the final evaluations with. Thus, figuring out which evaluation metric actually corresponds with we want is not trivial. One scheme for evaluating the metric itself would be to show a human (me, in practice) pairs of transcriptions and have the human decide which one is better. Then, we could look at which evaluation metric correlates the best with the human evaluation.

Other failed ideas

- I also tried adding more than 2 OCR outputs in the input, but the performance didn’t increase after that, and started clearly decreasing at 4 versions of the OCR transcriptions.

- I tried running the model through the refined LLM outputs another time, to see if iterating on the same text multiple times improves performance. This helped initially with GPT-3.5, but made results worse with GPT-4. Although I didn’t try a variant where I include the original OCR output as well as the initial GPT-4 output in the input. Perhaps that would be still better.

Lessons learned

I will also try to summarize here some of the higher-level lessons that I’ve personally learned:

- Signal. When doing something like this where we try to improve an imperfect piece of text, it is important to try to get as much signal from the original data as possible, e.g., through adding multiple noisy measurements of the text to the context, or working on improving the original OCR output.

- Multilinguality vs. English. Prompting LLMs (at least these GPT models) with English on a Finnish language task works better than prompting to do the same task in Finnish. But I’m not sure what’s the right mental model here. Is it just better at following instructions in English? Is the model somehow just more capable/intelligent when having English text in the input?

- Having a scheme for evaluation evaluation. As mentioned already, when using automatic evaluation schemes, it is probably a good idea to evaluate the evaluation metric itself, and at least do some sanity checks to see if it actually corresponds to what we want. And this is probably a good idea to do periodically during a project to make sure that we are not overfitting on some quirk of the metric itself. This in turn would require work to set up some easy-to-use scheme for human evaluation in the initial stages of the project.

- Curated context information. Adding compressed and hand-crafted context information in the form of names and places clearly helped the performance, whereas attempts with adding the entire previous page or examples of transcription didn’t help as straightforwardly. Thinking about and experimenting on what kinds of context information is required for the task at hand will probably pay off.

- Simplification with larger models. Some tricks may work on smaller models (GPT-3.5), but larger and better models make them reduntant (GPT-4). With GPT-3.5, refining the text through the model twice improved the quality a bit, but on GPT-4, the quality was directly better on the first step, and a second step decreased the quality.

- Inspecting the mistakes made to improve the model. Regardless of whether the evaluation metric you have works well, it is probably a good idea to look at the outputs / inputs of the model to see what kinds of mistakes it does. This can give ideas for new experiments. For me, this kind of thing resulted in figuring out that the signal from the original data was a bottleneck, or in some cases it was translating the text to English, for instance 1. With LLMs, it is probably easy to come up prompt-tuning tricks that alleviate the mistakes you notice the model is making.

Although, when first trying out GPT-4, performance dropped significantly. Funnily enough, it turned out that it had started translating the text into English, and also sometimes started the message with some chat-bot like additional comments (‘Yeah, of course I can do this for you!…’). So seems like GPT-4 just had a slightly stronger inclination to give specific types of answers than just follow the instructions. This was mostly fixed by adding the phrase ‘Write only the corrected text after the OCR transcription, in Finnish, without anything else’ to the prompt. ↩︎ ↩︎

There are a couple of outliers in the plots, which are due to similar issues where the format of the output is totally off. ↩︎